Combining LLMs with a programming language creates sophisticated applications that can answer natural language questions about data and perform complex analytical operations.

In SAS, LLMs are well integrated into SAS Viya, but can we also use LLMs in SAS 9? YES, you can!

In SAS 9, PROC HTTP can be used to make HTTP requests. The procedure writes the response to a file, and when that response is JSON it can be read easily with the LIBNAME JSON engine.

Thanks to this, LLM APIs such as OpenAI, Mistral, or even a custom API created in FastAPI can also be queried.

Plenty of things can be done with LLMs and SAS. In this project, we selected three of them and leveraged the FastAPI web application Jef in SAS Enterprise Guide/Base to perform three actions: guess dataset names, generate SQL queries, and generate exercises.

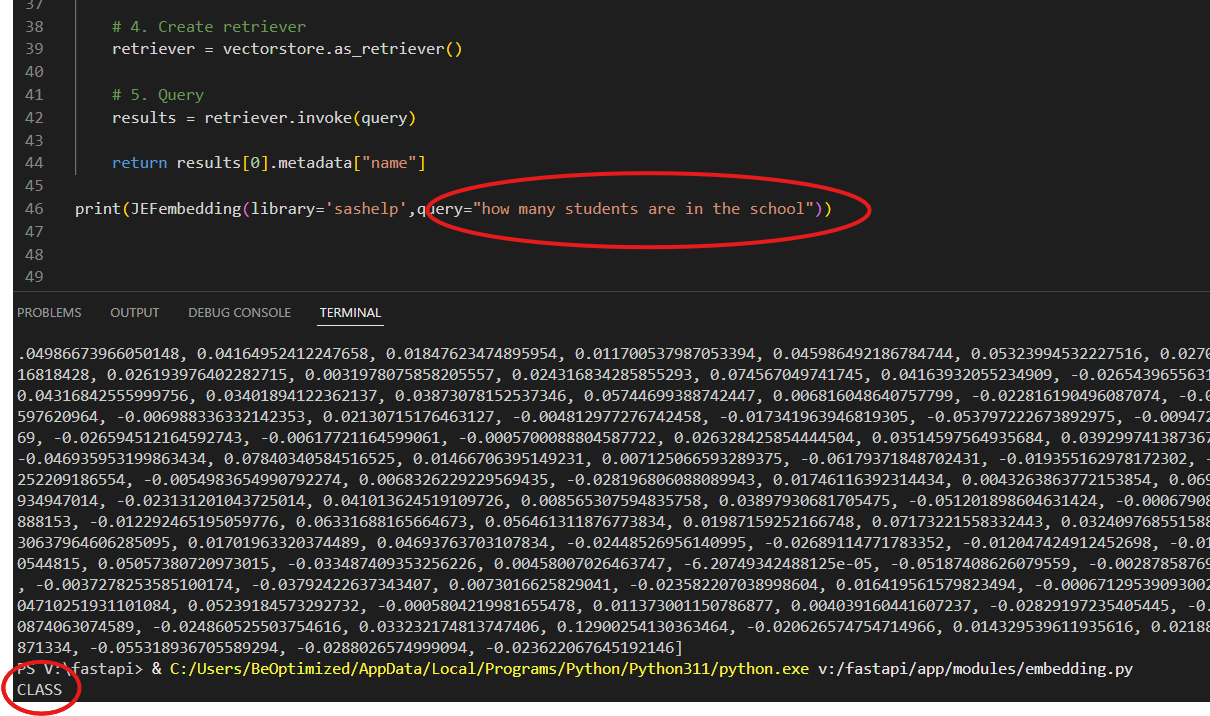

Guess dataset name: here we use LLMs together with RAG technology to guess dataset names. Behind the scenes, we converted the dataset labels of the SASHELP tables into embedding vectors. When a prompt is submitted, it is also converted into a vector and compared with the stored ones. Once the closest vector is identified, its corresponding index (the SAS dataset name) is returned.
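The lookup described above can be sketched in a few lines of plain Python. This is only an illustration, not the code behind Jef: the three-dimensional vectors are invented by hand (a real embedding model would produce them from the dataset labels), and cosine similarity is assumed as the measure of closeness.

```python
import math

# Hypothetical toy embeddings keyed by dataset name. In the real project the
# vectors would come from an embedding model applied to the dataset labels;
# the numbers below are made up for illustration.
LABEL_VECTORS = {
    "CARS": [0.9, 0.1, 0.0],
    "HEART": [0.0, 0.2, 0.9],
    "PRICEDATA": [0.1, 0.9, 0.1],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def guess_dataset(prompt_vector, label_vectors):
    """Return the dataset name whose label vector is closest to the prompt."""
    return max(
        label_vectors,
        key=lambda name: cosine_similarity(prompt_vector, label_vectors[name]),
    )


# A prompt vector pointing roughly in the same direction as the CARS label.
print(guess_dataset([0.8, 0.2, 0.1], LABEL_VECTORS))
```

A vector store does the same job at scale: it keeps the label vectors, embeds the prompt, and returns the identifier of the nearest neighbour.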

It might seem useless, but this technology is not based on fuzzy matching or simple substring search — it’s a semantic search algorithm available for free in all languages. Imagine a data warehouse with 50,000 tables; thanks to this technology, and based only on the labels, we could save hours of investigation...

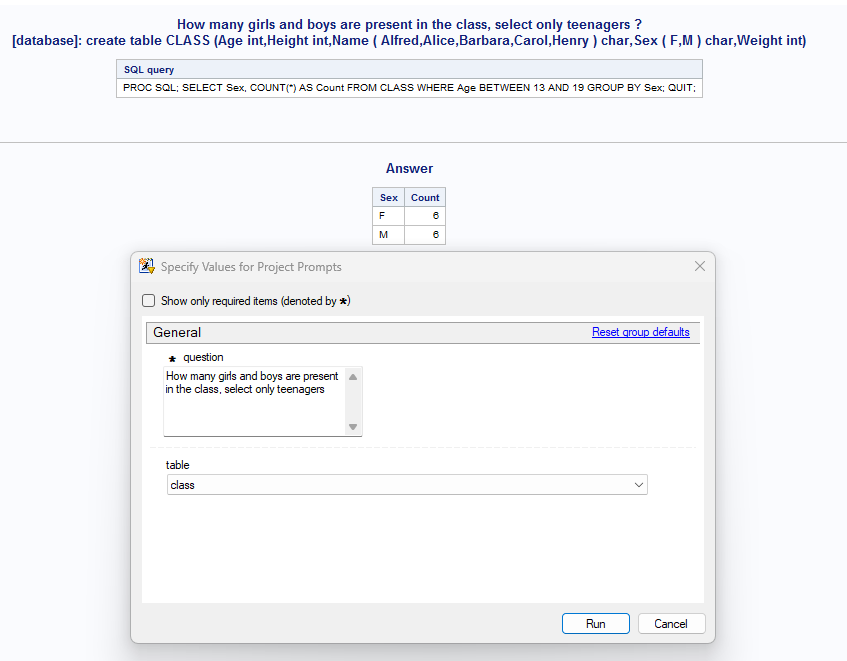

Generate SQL query: in this project, we use LLMs to generate SQL queries on the fly based on a provided input table (see “Guess dataset name”). The data records are not sent to the LLM (that would cost too much). Instead, we use PROC CONTENTS to get the table metadata (variable names and types), a small SAS macro to generate starting values for character variables, and send both as context to the LLM.
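As a rough sketch of the idea, the context sent to the LLM could be assembled as below. The function name `build_sql_prompt`, the prompt wording, and the input shapes are assumptions made for illustration. In the project itself the metadata comes from PROC CONTENTS and a SAS macro, and the actual prompt is not shown here.

```python
def build_sql_prompt(table, columns, sample_values, question):
    """Assemble an LLM prompt from table metadata only (no data records).

    columns: list of (variable_name, variable_type) pairs.
    sample_values: dict mapping a character variable to a few example values.
    """
    lines = [f"Table: {table}", "Columns:"]
    for name, col_type in columns:
        line = f"- {name} ({col_type})"
        examples = sample_values.get(name)
        if examples:
            # Only a handful of example values are attached, never full rows.
            line += " e.g. " + ", ".join(repr(v) for v in examples)
        lines.append(line)
    lines.append(f"Question: {question}")
    lines.append("Return a single SQL query for this table and nothing else.")
    return "\n".join(lines)


prompt = build_sql_prompt(
    "SASHELP.CLASS",
    [("Name", "char"), ("Sex", "char"), ("Age", "num")],
    {"Name": ["Alfred", "Alice"], "Sex": ["F", "M"]},
    "What is the average age by sex?",
)
print(prompt)
```

Keeping the prompt limited to names, types, and a few values keeps the token count small and independent of the table size.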

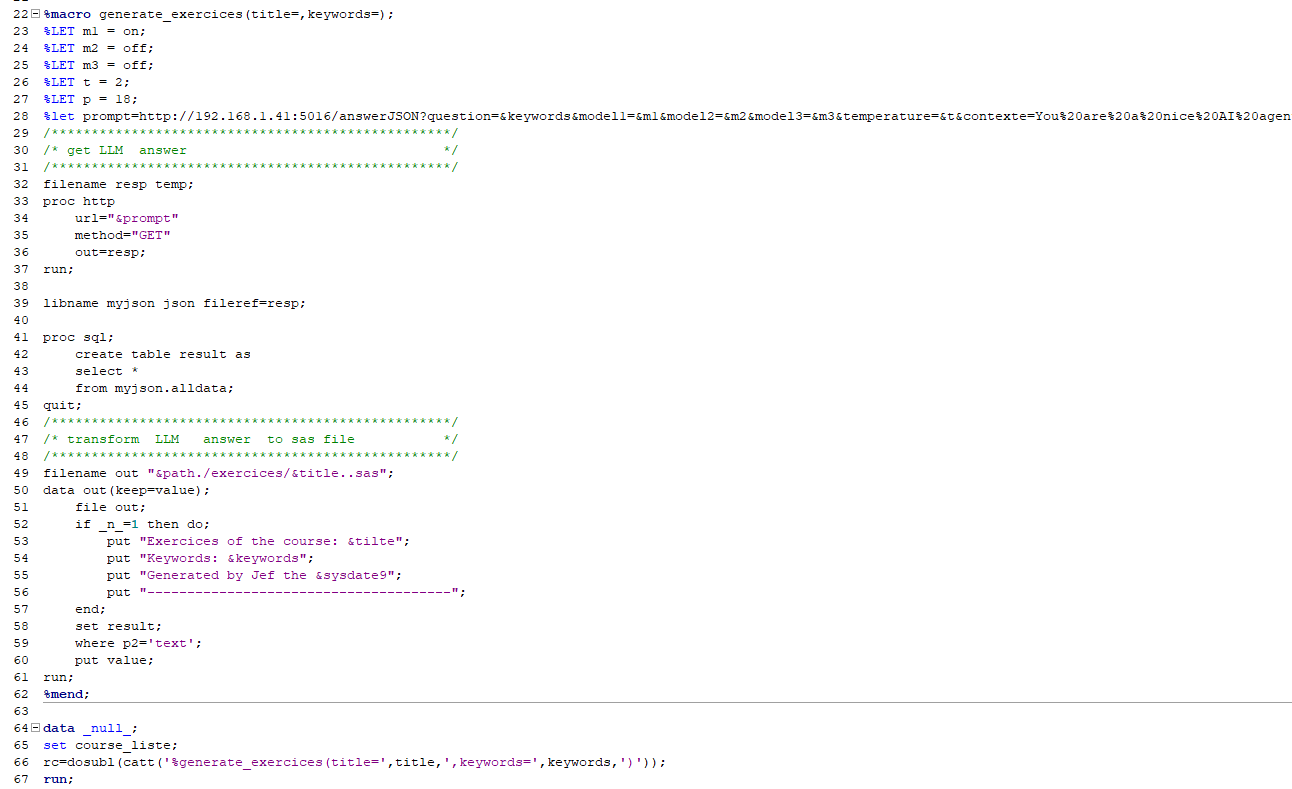

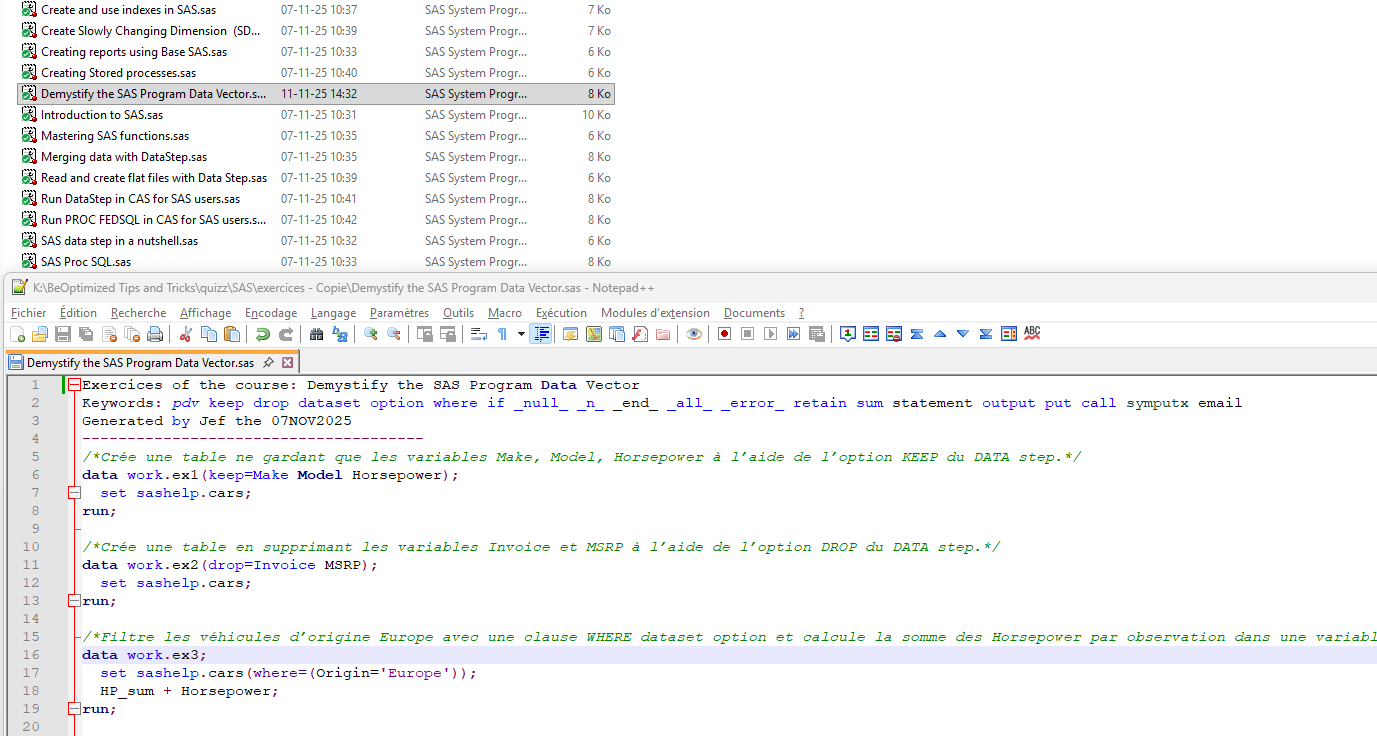

Generate exercises: suppose you are teaching a programming language and have a course list stored in Excel together with keywords. Could we, based on these keywords and a good LLM prompt, generate a list of questions and answers based on a given dataset? That’s precisely what we did in this mini project.
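A minimal sketch of that loop, assuming the course list has already been read from Excel into a list of dictionaries. The field names `title` and `keywords`, the function `exercise_prompts`, and the prompt wording are all hypothetical; each resulting prompt would then be sent to the LLM.

```python
def exercise_prompts(courses, dataset, n_questions=3):
    """Build one LLM prompt per course from its title and keywords."""
    prompts = []
    for course in courses:
        keywords = ", ".join(course["keywords"])
        prompts.append(
            f"Write {n_questions} exercises with answers on the topic "
            f"'{course['title']}' (keywords: {keywords}) "
            f"using the dataset {dataset}."
        )
    return prompts


# Hypothetical course list standing in for the Excel sheet.
courses = [
    {"title": "PROC SQL basics", "keywords": ["select", "where", "group by"]},
    {"title": "DATA step", "keywords": ["retain", "first.", "last."]},
]

for p in exercise_prompts(courses, "SASHELP.CLASS"):
    print(p)
```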

This project was made possible thanks to three books I highly recommend: AI Agents in Action (Michael Lanham), Unlocking Data with Generative AI and RAG (Keith Bourne), and Data Analysis with LLMs (Immanuel Trummer).

Jef was built using FastAPI and is available from everywhere on the local network. This includes PROC HTTP from SAS :-) In this presentation, we take the role of a Data Analyst and create SQL queries using an LLM.

In this presentation, we try to guess dataset names thanks to the embedding vector technology available in LangChain and Chromadb. Here the 'documents' are the SAS dataset labels and the indexes are the dataset names.

Instead of feeding the result into the LLM context, we return the dataset name directly to the user interface through FastAPI and PROC HTTP. The user can then decide whether or not to use the dataset(s).

In this presentation, we generate SAS exercises 'on the fly' thanks to the ChatGPT5 API.

More precisely, we call the Jef FastAPI application via PROC HTTP and loop through the entire BeOptimized tips and tricks catalog using SAS functions.
